Blender x Lambdalabs

| Author | Reading time |
|---|---|
| Nicolas Dorriere | 3 min |

Blender

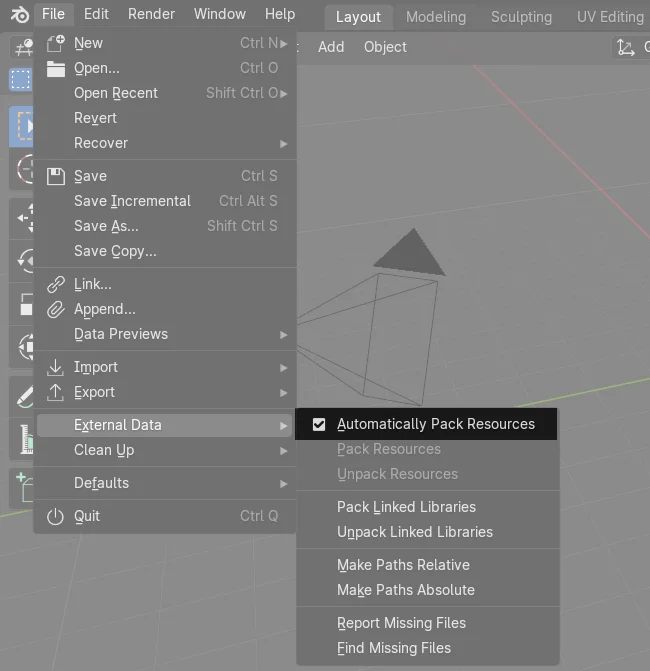

Before rendering in the cloud, you must pack the resources into the .blend file in Blender (File > External Data > Automatically Pack Resources). Otherwise some elements will render without their textures and show up bright pink in the rendered scene.

Save the file as myproject.blend and then upload it via SFTP to the Lambdalabs VM.
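If you prefer the command line, packing and uploading can also be scripted. This is a sketch, not the author's workflow. It assumes `blender` is on your PATH and uses Blender's Python API (`bpy.ops.file.pack_all()` then `bpy.ops.wm.save_mainfile()`) in background mode. It also swaps the interactive SFTP client for `scp`, which on OpenSSH 9.0 and later transfers over the SFTP protocol. The host `ubuntu@my-lambda-vm` is a placeholder, and the helper names and the `DRY_RUN` switch (print instead of execute) are hypothetical conveniences.

```shell
#!/usr/bin/env bash
# Sketch: pack external resources into the .blend, then upload it to the VM.
# Assumptions: blender is on PATH; "ubuntu@my-lambda-vm" is a placeholder host.
# pack_blend, upload_blend and DRY_RUN are hypothetical names for illustration.
set -euo pipefail

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [[ -n "${DRY_RUN:-}" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Embed textures and other external files, then save the .blend in place.
pack_blend() {
  local blend_file="$1"
  run blender -b "$blend_file" --python-expr \
    "import bpy; bpy.ops.file.pack_all(); bpy.ops.wm.save_mainfile()"
}

# Copy the packed file to the ubuntu user's home directory on the VM.
upload_blend() {
  local blend_file="$1" host="$2"
  run scp "$blend_file" "${host}:/home/ubuntu/"
}
```

Typical use is `pack_blend myproject.blend` followed by `upload_blend myproject.blend ubuntu@my-lambda-vm`. Prefix either call with `DRY_RUN=1` to see the exact command first.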

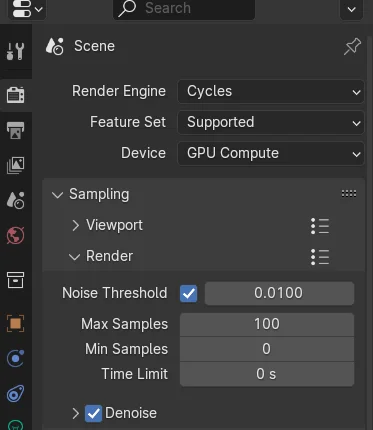

Scene render: Cycles, 100 samples + denoising

Lambdalabs

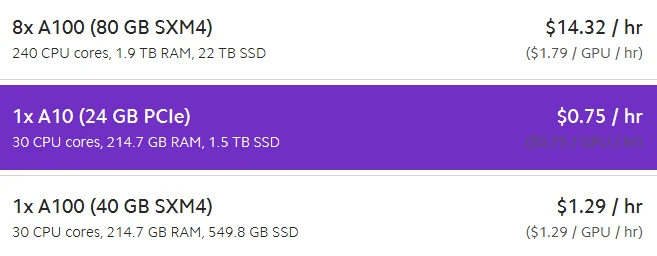

Blender rendering is very GPU-intensive, so I rent GPU power in the cloud. In my opinion, Lambdalabs is still the best option: it provides GPUs billed by the hour.

Update 26/12/2024: I just discovered a new cloud provider offering Nvidia cards on demand, https://www.primeintellect.ai. It is very interesting because it offers Nvidia consumer GPUs from the RTX 40xx series.

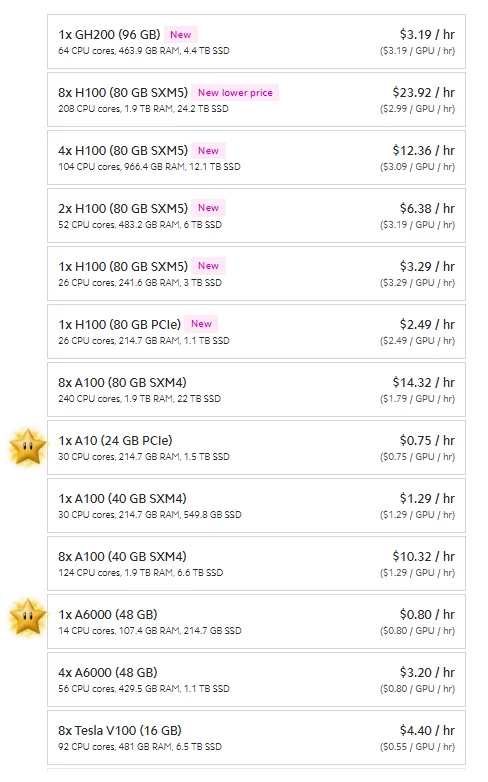

The cards available on Lambdalabs are not consumer cards such as the RTX 30xx or 40xx. They are data-center cards such as the A100, H100 and B100, which are often very expensive to use: an H100 costs $2.49 per hour. For Blender, we will stick with the A6000 ($0.80/h) and the A10 ($0.75/h).

Benchmark scores

Source: https://opendata.blender.org/benchmarks

| GPU | Score (higher is better) |
|---|---|
| NVIDIA RTX 4070 | 5129 |
| NVIDIA RTX A6000 | 4999 |
| NVIDIA A10 | 3570 |
| NVIDIA RTX 4060 | 3062 |
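Dividing each cloud card's benchmark score by its hourly price gives a rough measure of rendering throughput per dollar. This is only a back-of-the-envelope comparison. It assumes the score scales linearly with rendering speed for your own scene and that the prices quoted above ($0.80/h for the A6000, $0.75/h for the A10) still apply.

$$
\frac{4999}{0.80\ \$/\text{h}} \approx 6249\ \text{(RTX A6000)}, \qquad
\frac{3570}{0.75\ \$/\text{h}} = 4760\ \text{(A10)}
$$

On these numbers alone, the A6000 delivers roughly 31% more benchmark score per dollar than the A10.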

To get the most out of the cloud, we can render with several Nvidia cards at once. There is no hard limit other than the number of Nvidia VMs available on Lambdalabs, so it may be possible to use 10, 50 or even 100 cards for a single Blender render.

This is practical for massive renders that would take hours on one or two RTX 4090s, not to mention the electricity consumed at home. As a reminder, in Germany on November 23, 2024, electricity cost €0.40 per kilowatt-hour.
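To put the home electricity cost in perspective, here is a rough estimate. It assumes each of two RTX 4090 cards draws about its 450 W rated board power, ignores the rest of the PC, and uses the €0.40/kWh rate quoted above:

$$
2 \times 0.45\ \text{kW} \times 0.40\ \text{€/kWh} = 0.36\ \text{€/h}
$$

At that rate, a 10-hour render would cost about €3.60 in GPU power alone, before counting the CPU, cooling and the time the machine is unavailable.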

Here is the cost for a 20-second video at 1080 x 1920, 24 FPS, using four A10 cards.

The total price was $5.30.
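As a rough sanity check, this price can be converted back into rendering time. The calculation assumes the four A10 instances were billed at the $0.75/h listed above and all ran for the same duration:

$$
t \approx \frac{5.30\ \$}{4 \times 0.75\ \$/\text{h}} = \frac{5.30}{3.00}\ \text{h} \approx 1.77\ \text{h} \approx 106\ \text{min}
$$

This figure includes any time the VMs spent on setup and file transfers, so it is an upper bound on pure rendering time.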

Link to the video: Le Carnaval de Granville 2024.mp4

Lambdalabs VM

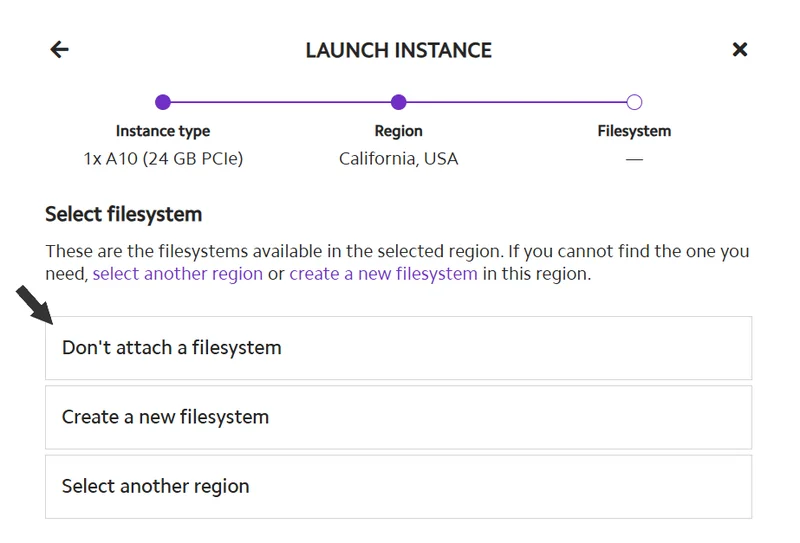

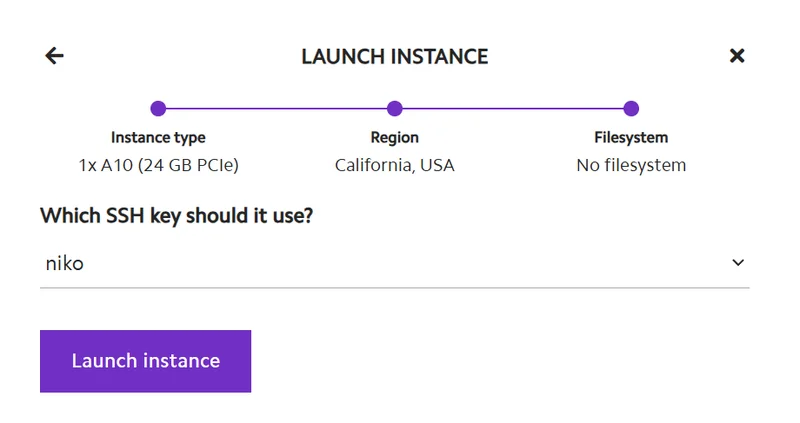

Lambdalabs dashboard init


After uploading your public SSH key to the Lambdalabs interface, you can connect to the Ubuntu Nvidia VM on Lambda:

$ ssh ubuntu@my-lambda-vm

Install Blender as a classic snap and set up an alias for convenience:

$ sudo apt update -y

$ sudo snap install blender --classic

$ alias blender=/snap/bin/blender

⁜ Using a single Lambdalabs VM

For a 10-second film at 30 fps, that is 300 frames:

$ blender -b untitled.blend -noaudio -E 'CYCLES' -s 1 -e 300 -F 'PNG' -a -- --cycles-device OPTIX

Use OPTIX instead of CUDA as the Cycles device.

If the scene has a lot of reflections, rendering is about 15% faster.

Moreover, I think denoising is done on the GPU when using OPTIX.
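Blender processes command-line arguments in order, and `-a` starts rendering as soon as it is read. Settings such as the output format therefore have to come before `-a`. A small wrapper can keep that order fixed. This is a sketch under assumptions: `render_range` and `DRY_RUN` are hypothetical names. `-o '/tmp/####'` is an addition that pins the output to `/tmp` with four-digit frame numbers (for example `/tmp/0001.png`), even if the .blend file was saved with a different output path.

```shell
#!/usr/bin/env bash
# Sketch: render a frame range with Cycles on OptiX, keeping Blender's
# argument order correct (every setting must come before -a).
# render_range and DRY_RUN are hypothetical names for illustration.
set -euo pipefail

# Run a command, or only print it when DRY_RUN is set.
run() {
  if [[ -n "${DRY_RUN:-}" ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Usage: render_range FILE.blend START END
render_range() {
  local blend_file="$1" start="$2" end="$3"
  # -o '/tmp/####' writes /tmp/0001.png, /tmp/0002.png, ... (4-digit padding).
  # Arguments after "--" are ignored by Blender itself; Cycles reads
  # --cycles-device from them.
  run blender -b "$blend_file" -noaudio -E CYCLES -o '/tmp/####' -F PNG \
    -s "$start" -e "$end" -a -- --cycles-device OPTIX
}
```

For the single-VM case above, this would be `render_range untitled.blend 1 300`.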

⁜ Using multiple Lambdalabs VMs

For a 20-second film at 30 fps, that is 600 frames.
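The per-VM frame ranges follow from frames = duration × frame rate (20 s × 30 fps = 600) divided by the number of VMs. Here is a minimal sketch that computes them. It assumes frame numbering starts at 1 and gives any leftover frames to the last VM; `split_frames` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: split frames 1..TOTAL into N contiguous ranges, one per VM.
# split_frames is a hypothetical helper; the last VM also takes any remainder.
set -euo pipefail

# Usage: split_frames TOTAL_FRAMES NUM_VMS  -> prints "start end" per VM
split_frames() {
  local total="$1" vms="$2"
  local chunk=$(( total / vms ))
  local i start end
  for (( i = 0; i < vms; i++ )); do
    start=$(( i * chunk + 1 ))
    if (( i == vms - 1 )); then
      end=$total
    else
      end=$(( (i + 1) * chunk ))
    fi
    echo "$start $end"
  done
}

# 20 s at 30 fps = 600 frames, shared by 4 VMs.
split_frames $(( 20 * 30 )) 4
```

With 600 frames and 4 VMs, this prints the ranges 1–150, 151–300, 301–450 and 451–600, which are the `-s`/`-e` values used for the four VMs.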

On the master machine (VM1), upload the Blender scene, then copy it to the other 3 machines with rsync:

$ rsync -avz /home/ubuntu/untitled.blend ubuntu@vm2:/home/ubuntu/

$ rsync -avz /home/ubuntu/untitled.blend ubuntu@vm3:/home/ubuntu/

$ rsync -avz /home/ubuntu/untitled.blend ubuntu@vm4:/home/ubuntu/

Using 4 VMs divides the rendering time by roughly 4. Each VM renders a quarter of the frames:

$ blender -b untitled.blend -noaudio -E 'CYCLES' -s 1 -e 150 -F 'PNG' -a -- --cycles-device OPTIX   # on VM1

$ blender -b untitled.blend -noaudio -E 'CYCLES' -s 151 -e 300 -F 'PNG' -a -- --cycles-device OPTIX   # on VM2

$ blender -b untitled.blend -noaudio -E 'CYCLES' -s 301 -e 450 -F 'PNG' -a -- --cycles-device OPTIX   # on VM3

$ blender -b untitled.blend -noaudio -E 'CYCLES' -s 451 -e 600 -F 'PNG' -a -- --cycles-device OPTIX   # on VM4

Copy the images from VM1, VM2 and VM3 to VM4 for the ffmpeg encoding:

$ rsync -avz /tmp/*.png ubuntu@VM4:/tmp   # on VM1

$ rsync -avz /tmp/*.png ubuntu@VM4:/tmp   # on VM2

$ rsync -avz /tmp/*.png ubuntu@VM4:/tmp   # on VM3
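Before encoding, it is worth confirming on VM4 that every frame arrived. A failed transfer or an interrupted render would otherwise leave a silent gap in the video. Here is a sketch that assumes the frames use Blender's four-digit padding (`0001.png` to `0600.png`) in `/tmp`; `missing_frames` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: list frame numbers that have no matching PNG in a directory.
# Assumes 4-digit zero-padded names such as 0001.png (Blender's default padding).
# missing_frames is a hypothetical helper name.
set -euo pipefail

# Usage: missing_frames DIR FIRST LAST  -> prints each missing frame number
missing_frames() {
  local dir="$1" first="$2" last="$3" n name
  for (( n = first; n <= last; n++ )); do
    printf -v name '%s/%04d.png' "$dir" "$n"
    [[ -f "$name" ]] || echo "$n"
  done
}
```

On VM4, `missing_frames /tmp 1 600` prints nothing when all 600 frames are present. Any number it prints points to the VM whose range needs to be re-rendered or re-copied.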

VM4 now has all the images.

FFMPEG

On VM4, encode the 600 images at 30 fps into an H.264 video with ffmpeg. This step is very fast compared with the rendering itself:

$ cd /tmp

$ ffmpeg -framerate 30 -i %04d.png -c:v libx264 -pix_fmt yuv420p output.mp4

Monitoring

$ sudo apt install nvtop

$ sudo nvtop

$ watch -n0 nvidia-smi

Creation

See the gallery: https://nicolas-dorriere.fr/blender.html