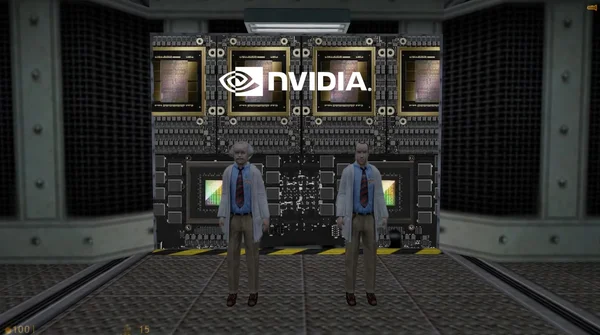

Words or tokens are represented as high-dimensional vectors (embeddings). Matrix operations (matrix multiplications, including those inside transformer attention) dominate both the training and the inference of models. These computations require massively parallel computing power, similar to that used for 3D graphics.
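To make this concrete, here is a minimal sketch in plain Python of how embedding lookup and attention reduce to matrix multiplications. The `EMBEDDINGS` table, its 4-dimensional vectors, and the helper names are hypothetical and chosen only for illustration. Real models use learned embeddings with hundreds or thousands of dimensions and learned query/key/value projections, which are omitted here.

```python
import math

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m); both are lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]

def softmax(row):
    """Turn a row of scores into weights that sum to 1."""
    peak = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    scores = matmul(q, transpose(k))  # first matrix multiplication
    weights = [softmax([s / math.sqrt(d) for s in row]) for row in scores]
    return matmul(weights, v)  # second matrix multiplication

# Hypothetical toy embedding table: each token maps to a 4-dimensional vector.
EMBEDDINGS = {
    "the": [1.0, 0.0, 0.0, 0.0],
    "cat": [0.0, 1.0, 0.0, 0.0],
    "sat": [0.0, 0.0, 1.0, 0.0],
}

tokens = ["the", "cat", "sat"]
x = [EMBEDDINGS[t] for t in tokens]  # 3 x 4 matrix, one row per token

# Self-attention with Q = K = V = x (an assumption; no learned projections).
out = attention(x, x, x)
```

Every output entry in `matmul` is an independent sum of products, which is why the work spreads so well across the many cores of a GPU. The nested loops here are for readability only; in practice the same operations run through optimized GPU libraries.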