The Wi-Fi attack surface remains quite large in 2025. Remove old SSID connections and disable auto-connect.

Rue Montgallet - No refunds!

AI workflow?

Frontend = Cursor Sonnet 4.5

Backend = Claude Code Opus 4.1 (plan) & Sonnet 4.5

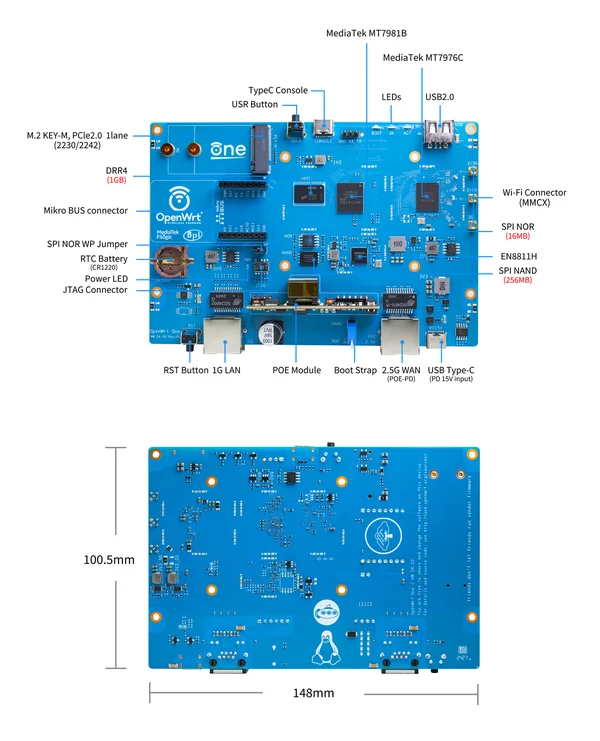

Should I put the OpenWrt One router in my homelab? It's really tempting me right now. It uses a MediaTek Wi-Fi chipset, and phew, no Broadcom (hello Raspberry Pi).

For now, I'm using the router (modem) that Orange provides with its Livebox. The thing is, Orange doesn't offer a bridge mode like Freebox does.

AI

Overview of API provision and proxies for consumption.

Generally, avoid unified API platforms — they are very expensive. It's better to consume APIs directly from the source, such as the creators of frontier models like Mistral, Anthropic, etc.
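To make "directly from the source" concrete, here is a minimal sketch of a call to Anthropic's own endpoint using only the Python standard library. The model alias, the `max_tokens` value and the helper names `build_request` and `ask` are assumptions for illustration; check the provider's documentation for current model names.

```python
import json
import urllib.request

ANTHROPIC_URL = "https://api.anthropic.com/v1/messages"


def build_request(api_key: str, prompt: str,
                  model: str = "claude-sonnet-4-5") -> urllib.request.Request:
    """Build (but do not send) a request to the provider's own endpoint."""
    body = {
        "model": model,  # assumed alias, verify against the provider's model list
        "max_tokens": 256,  # arbitrary value for the example
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        ANTHROPIC_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def ask(api_key: str, prompt: str) -> str:
    """Send the request and return the text of the first content block."""
    with urllib.request.urlopen(build_request(api_key, prompt)) as resp:
        payload = json.load(resp)
    return payload["content"][0]["text"]
```

No intermediary sits between the script and the provider here: the key goes straight to the API in the `x-api-key` header, and billing is whatever the provider charges.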

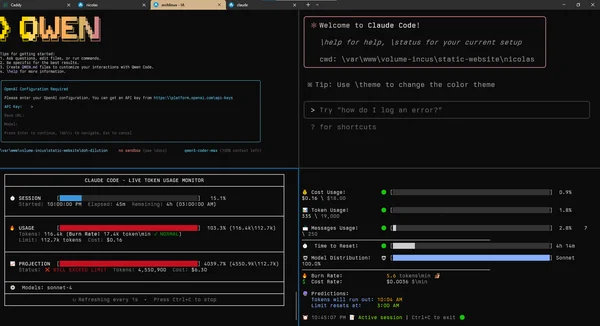

TUIs are also gaining popularity in the summer of 2025. The major creators of frontier models all have their own TUIs. I prefer using them because they are much lighter and more minimalist in approach. Moreover, the models are often nerfed and distilled in Cursor or Windsurf, with smaller context windows.

I find that the ideal way to consume inference APIs is through a TUI. Nicolas

✶ API Inference

pure 🟢

api.anthropic.com/v1

api.mistral.ai/v1

api.deepseek.com/v1

api.moonshot.ai/v1

generativelanguage.googleapis.com

unified 🔴

OpenRouter

Eden AI

LiteLLM

TogetherAI

✶ API Inference Proxy

TUI 🟢

Claude Code

Qwen Code

Gemini CLI

Crush

Opencode

Anon Kode

Aider

GUI 🔴

Cursor

Windsurf

Trae

Kiro

GUI 🔴 (VS Code extension)

Cline

Kilo Code

Roo Code

Continue

Mistral Code

Augment Code

Keep Thinking

Personally, I think the terminal is the best interface for a code-oriented agent like Claude or Qwen. No friction, no heavy IDE client. You just type 'claude' or 'qwen' and boom: the entire folder context is loaded behind a simple prompt interface. It's art: minimalist, simple, just the way I like it.

The Open-Source Chinese AI Gang

Qwen - Kimi - DeepSeek

🇨🇳

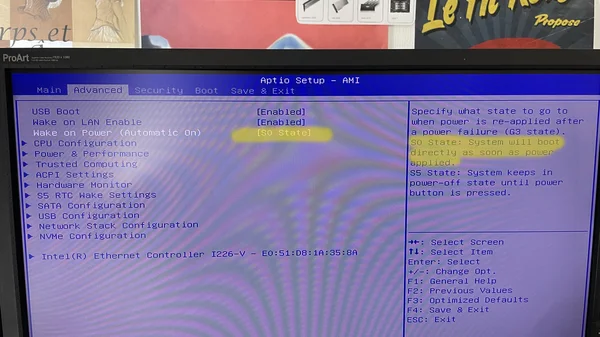

After a power outage, the GMKtec G3+ mini PC can power back on automatically once the power-restore setting in the BIOS is set to S0.

How to Move Back On-Prem

It was probably DNS 😂

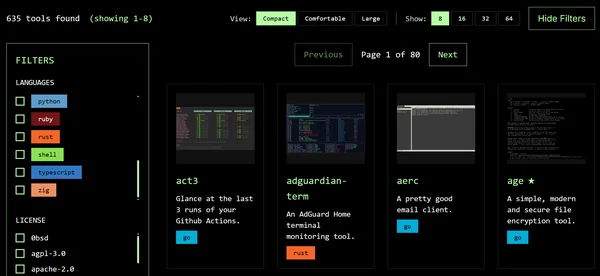

A curated site of TUIs

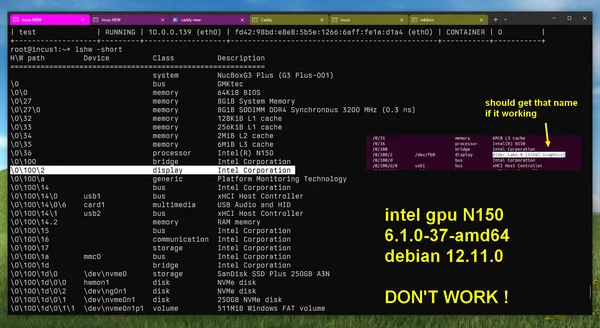

While attempting to enable GPU hardware acceleration in LXC containers using Incus, I encountered a significant roadblock: Debian 12's kernel 6.1 lacks support for the Intel N150 GPU, making hardware acceleration impossible.

This led me to explore bleeding-edge distributions like Arch Linux. I tested vanilla Arch Linux 2025.07.01 with kernel 6.15.4, and the GPU support worked flawlessly out of the box.
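A quick way to check a host before fighting with container passthrough is to compare its kernel release against the one that worked. The sketch below only encodes the two data points from this post (6.1 failed, 6.15.4 worked); the exact minimum kernel for the N150 GPU is not established here, so using 6.15.4 as the threshold is a conservative assumption, and the function names are hypothetical.

```python
import platform
import re

# Known-good release from the test above. The real minimum may be lower;
# this threshold is an assumption, not a documented requirement.
KNOWN_GOOD = (6, 15, 4)


def parse_kernel_release(release: str) -> tuple[int, ...]:
    """Extract the leading numeric version from a string like '6.1.0-37-amd64'."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"unrecognized kernel release: {release!r}")
    # A missing patch component is treated as 0.
    return tuple(int(part) for part in match.groups(default="0"))


def at_least_known_good(release: str) -> bool:
    """True if the release is at or above the kernel that worked in the test."""
    return parse_kernel_release(release) >= KNOWN_GOOD


def current_kernel_ok() -> bool:
    """Apply the check to the running kernel."""
    return at_least_known_good(platform.release())
```

On the Debian 12 host, `at_least_known_good("6.1.0-37-amd64")` is false, which matches what I saw. A true result only means the kernel is at least as new as the one I tested, not that acceleration is guaranteed.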

My BambuLab A1

State-of-the-art printer

Warning: the 'Lexar SODIMM RAM DDR4 16GB, 3200 MHz' model is not compatible with the GMKtec G3+ N150. The Crucial CT16G4SFRA32A, however, works perfectly.

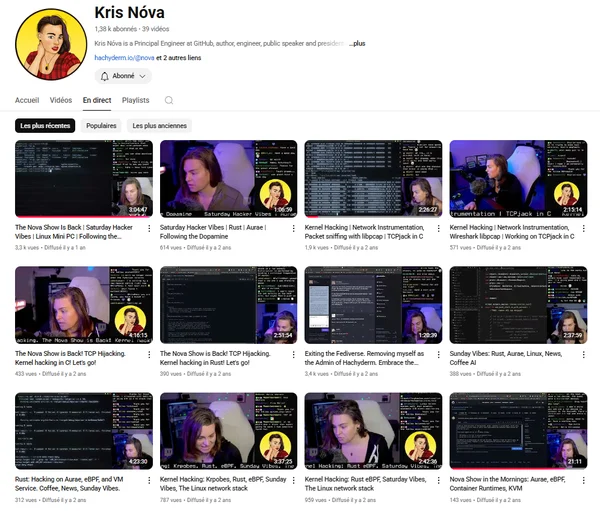

Kris Nóva streams

STEM models

Science, technology, engineering, and mathematics

- Grok 4

- o3-pro

- DeepSeek-R1

Heads-up: Debian 12.11 ships with the 6.1.0-37 kernel, which does not support the GPU of the Intel N150. I'm considering switching to Arch Linux on this GMKtec G3+ mini PC to get a more recent kernel and, with it, GPU support.

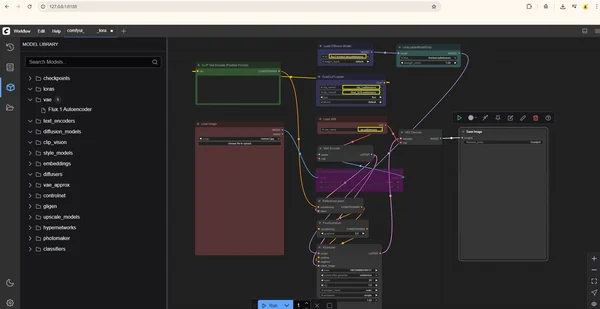

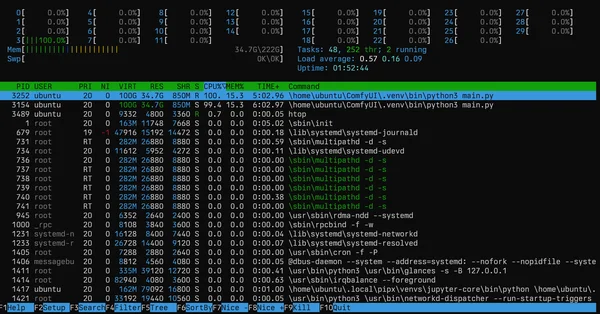

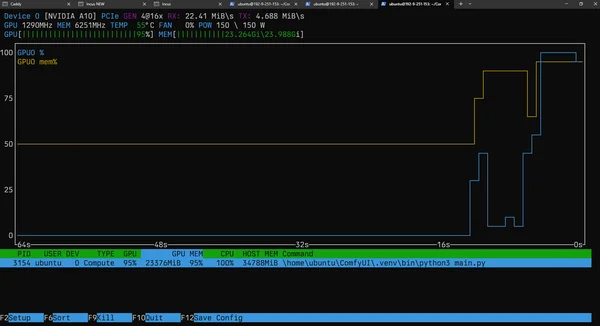

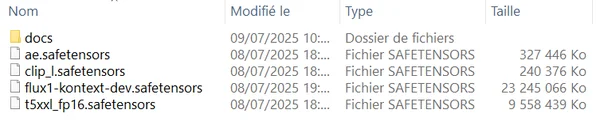

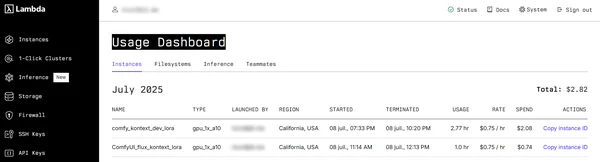

Using the raw 23 GB Flux Kontext Dev open-weight model with a LoRA on a Lambda.ai cloud GPU.

I have the models stored on my own hard drive, and I upload them directly to the Lambda instance via Filebrowser through an SSH port forward on port 8080. I also expose the ComfyUI dashboard through the tunnel on port 8188.
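The tunnel for both ports can be sketched as a single `ssh` invocation. This small helper just builds the command line; the host address and user name are placeholders, and it assumes Filebrowser and ComfyUI listen on `localhost` on the instance, which the setup above implies but does not spell out.

```python
import shlex


def tunnel_command(host: str, user: str,
                   ports: tuple[int, ...] = (8080, 8188)) -> list[str]:
    """Build an ssh command forwarding each local port to the same remote port."""
    cmd = ["ssh", "-N"]  # -N: run no remote command, forwarding only
    for port in ports:
        # -L local_port:remote_host:remote_port, resolved on the instance side
        cmd += ["-L", f"{port}:localhost:{port}"]
    cmd.append(f"{user}@{host}")
    return cmd


# Placeholder host (documentation address range) and user name.
print(shlex.join(tunnel_command("203.0.113.10", "ubuntu")))
# prints: ssh -N -L 8080:localhost:8080 -L 8188:localhost:8188 ubuntu@203.0.113.10
```

With the tunnel up, Filebrowser is reachable at `http://localhost:8080` and ComfyUI at `http://localhost:8188` on my machine, and neither port has to be exposed publicly on the instance.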

32 GB VRAM --> full-speed, full-precision model (24 GB)
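The 24 GB figure can be sanity-checked with back-of-the-envelope arithmetic: weight size is roughly parameter count times bytes per parameter. The sketch below assumes about 12 billion parameters stored at 16-bit precision (2 bytes each); both numbers are assumptions used for illustration, not values taken from this post.

```python
def weights_size_gb(params_billions: float, bytes_per_param: float) -> float:
    """Approximate weight size in decimal GB: parameters x bytes per parameter."""
    # (params_billions * 1e9 params) * bytes_per_param / (1e9 bytes per GB)
    return params_billions * bytes_per_param


def headroom_gb(vram_gb: float, weights_gb: float) -> float:
    """VRAM left for activations, the LoRA and the other pipeline components."""
    return vram_gb - weights_gb


size = weights_size_gb(12.0, 2.0)   # assumed: 12B parameters at 16-bit
spare = headroom_gb(32.0, size)     # 32 GB card from the note above
print(f"weights ~{size:.0f} GB, headroom ~{spare:.0f} GB")
```

Under those assumptions the weights come to about 24 GB, leaving roughly 8 GB on a 32 GB card, which is why the model can run at full precision without offloading.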